Re: RFC: methods of sched event tracing and my assumptions

From: Darren Hart

Date: Mon Aug 31 2009 - 19:01:17 EST

Darren Hart wrote:

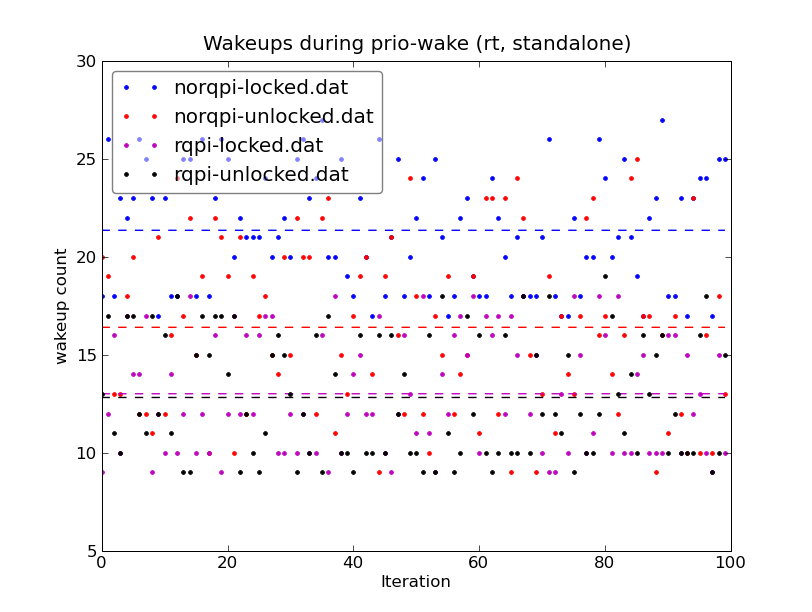

I'm trying to determine how Requeue PI affects pthread_cond_broadcast()

when using a PI mutex. This boils down to doing a FUTEX_REQUEUE

(requeue pi) instead of a FUTEX_WAKE(all) (without requeue pi). With

FUTEX_WAKE(all), every thread is woken on the broadcast and then goes

back to sleep to cycle through acquisition of the contended mutex. With

FUTEX_REQUEUE, only one task (at most, iff the mutex is uncontended

across the broadcast, which it normally is not) is woken at the time of

the broadcast, and the rest are simply requeued to be woken when it is

there turn to acquire the mutex.

At a minimum I would expect to see NR_THREADS (where NR_THREADS is the

number of threads queued on the condvar/futex at the time of the

broadcast) fewer "wakeups" when FUTEX_REQUEUE is used.

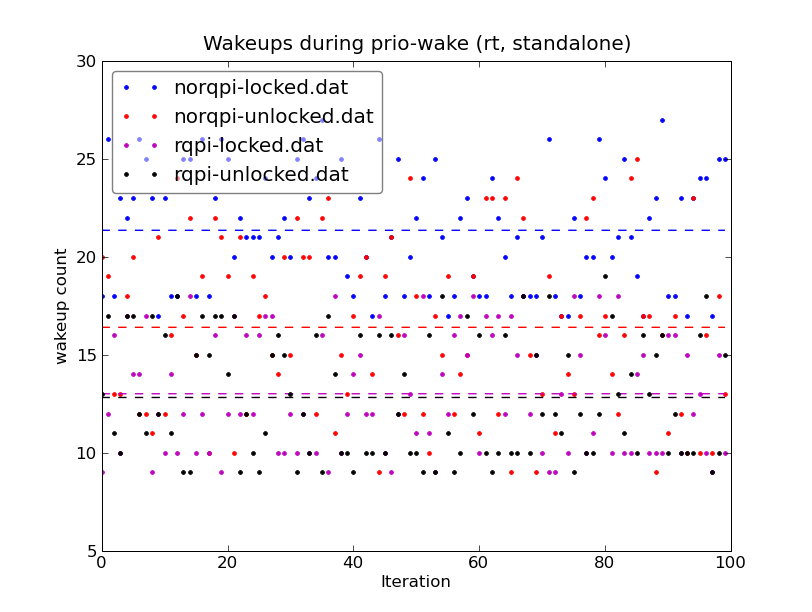

I have included two plots, one running on 2.6.31-rc8 (linus) and one

running on tip/rt/head as of Aug 24. The min86 refers to the threads of

prio-wake having a priority range of 86-93 rather than 1-8. I made this

change thinking the low priority threads might be seeing additional

scheduling activity due to the higher priority kernel threads in the rt

kernel and the extra preemption points. This change didn't have a

significant impact.

I was surprised to see the average for rqpi-locked (the case I care the

most about) to jump from an average of 17 wakeups on linus to 40 on rt.

I would greatly appreciate a review of my methods of data collection to

ensure my definition of "wakeup" is sane.

I'm collecting 100 traces of prio-wake runs (from LTP

testcases/realtime/func/prio-wake) as follows:

After some gentle resistance to CVS and non-single-file testcases, I

prepared a standalone prio-wake-min.c. It ran to completion on linus

with slightly fewer wakeups than the LTP version (I removed a number of

things that weren't strictly necessary for this specific exercise), png

attached. It's hung part way through the scripts on rt though. I'll be

looking into that, but in case you prefer to look at this version, it is

available here:

http://dvhart.com/darren/linux/prio-wake-min.c

--

Darren

$ cat trace-prio-wake #!/bin/sh

LOG=$1

shift

echo 1 > /debug/tracing/tracing_enabled

echo 0 > /debug/tracing/tracing_on

echo nop > /debug/tracing/current_tracer

echo 1 > /debug/tracing/events/sched/enable

echo 1 > /debug/tracing/options/latency-format

echo '' > /debug/tracing/trace

echo 1 > /debug/tracing/tracing_on

#/usr/bin/trace-cmd -s ./prio-wake $@

./prio-wake $@ 2>/dev/null

echo 0 > /debug/tracing/tracing_on

cat /debug/tracing/trace > $LOG.lat

echo 0 > /debug/tracing/options/latency-format

cat /debug/tracing/trace > $LOG.log

I run this script from another script which it 100 times each for all 4

combinations of with and without Requeue PI and with and without the

mutex locked across the pthread_cond_broadcast call:

$ cat gen_traces.sh

#!/bin/sh

# Generate 100 traces for each of 4 configurations

RQPI_LIB_PATH=/test/dvhart/lib/x86_64

ITERS=100

for ((i=0;i<ITERS;i++)); do

./trace-prio-wake norqpi-locked-$i

./trace-prio-wake norqpi-unlocked-$i -l0

LD_LIBRARY_PATH=$RQPI_LIB_PATH ./trace-prio-wake rqpi-locked-$i

LD_LIBRARY_PATH=$RQPI_LIB_PATH ./trace-prio-wake rqpi-unlocked-$i -l0

done

egrep -c "prio-wake.*wakeup:.*prio-wake" rqpi-unlocked*.log | sed -e

"s/.*://" > rqpi-unlocked.dat

egrep -c "prio-wake.*wakeup:.*prio-wake" rqpi-locked*.log | sed -e

"s/.*://" > rqpi-locked.dat

egrep -c "prio-wake.*wakeup:.*prio-wake" norqpi-locked*.log | sed -e

"s/.*://" > norqpi-locked.dat

egrep -c "prio-wake.*wakeup:.*prio-wake" norqpi-unlocked*.log | sed -e

"s/.*://" > norqpi-unlocked.dat

The 4 egrep commands generate the dat files I used in the attached

plots. They attempt to eliminate external scheduler events (those

caused by kernel threads preempting the test case for example, as that

is assumed to be irrelevant to the impact of requeue PI on this test. I

am only considering wakeups of the test threads that are caused by other

threads of the test. This should include all FUTEX operations,

including the cond_wait, cond_broadcast, and all PI mutex_lock and

unlock() calls. As such, I don't know what would cause the average to

jump from 17 to 40 on rt, as I wouldn't expect the test itself to be

doing any additional wakeups even if the kernel threads are preempting

the the test threads more frequently on RT.

I have also uploaded a single log for one run on rt with the mutex

locked (50th iteration with 46 wakeups reported by the egrep filter),

you can apply the egrep above to determine how the filter affects it.

For context, I had instrumented the futex operations with some

trace_printk's. Those will illustrate the key points in the test case:

FUTEX_WAIT_REQUEUE_PI and FUTEX_REQUEUE. That can be found here:

http://dvhart.com/darren/linux/rqpi-locked-50.log

o Do my data collection methods look sane?

o Does my definition of "wakeup" make sense?

o Does the jump from 17 to 40 wakeups going from linus to rt surprise

anyone else?

Thanks,

--

Darren Hart

IBM Linux Technology Center

Real-Time Linux Team